Stanford Scholars Find AI Detectors Unfairly Penalize Non-Native English Speakers

Scholar warns against placing trust in unreliable and easily manipulated detectors.

Study Findings

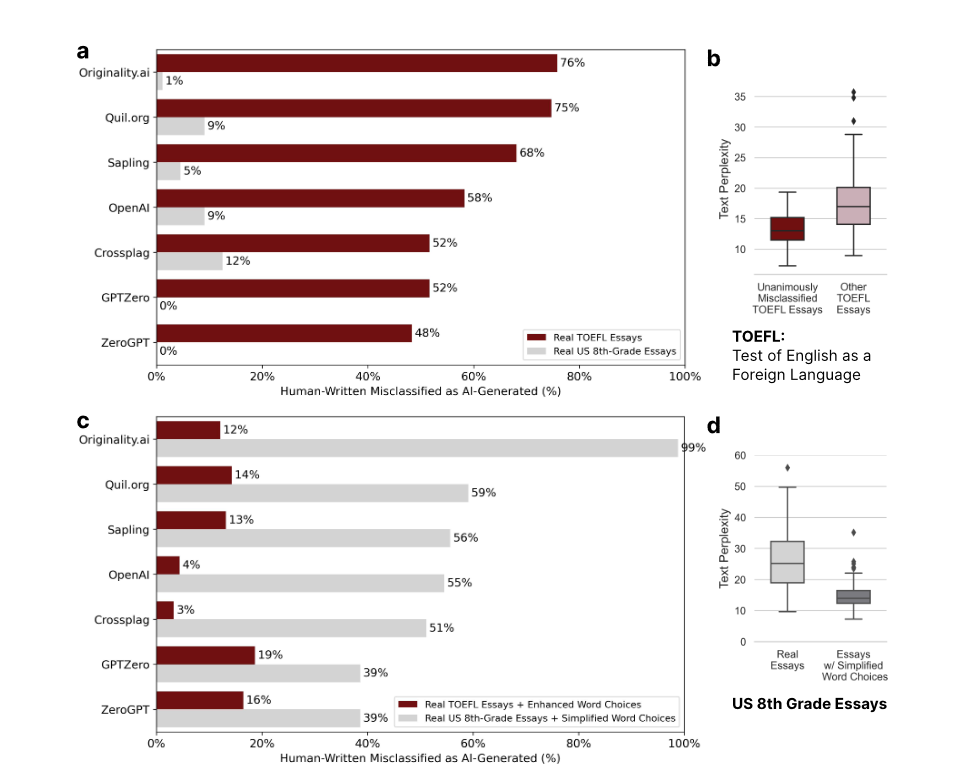

A new study from Stanford scholars finds that AI detectors, tools meant to identify AI-generated text, are biased against non-native English writers. The detectors were near-perfect when evaluating essays written by U.S.-born eighth-graders, but they classified more than half of the TOEFL essays written by non-native English students (61.22%) as AI-generated. In addition, all seven detectors unanimously identified 18 of the 91 TOEFL essays (about 20%) as AI-generated, and 89 of the 91 (about 98%) were flagged by at least one detector.

Image: Bias in GPT detectors against non-native English writing samples.
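As a quick arithmetic check, the two count-based shares in the findings can be recomputed from the raw counts of 18 and 89 out of 91 essays, which come to roughly 19.8% and 97.8%. The helper name `share_percent` is illustrative and not taken from the study.

```python
def share_percent(count, total):
    """Return count/total as a percentage, rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 2)


TOEFL_ESSAYS = 91  # number of TOEFL essays reported in the study

# Essays flagged by all seven detectors
print(share_percent(18, TOEFL_ESSAYS))

# Essays flagged by at least one detector
print(share_percent(89, TOEFL_ESSAYS))
```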

Issue with AI Detectors

The problem lies in how these detectors work. They typically score text on a metric known as ‘perplexity’, which correlates with the sophistication of the writing, an area in which non-native speakers naturally tend to trail their U.S.-born counterparts. Text with simpler, more predictable wording scores lower on perplexity and is therefore more likely to be flagged as AI-generated. This raises serious questions about the objectivity of AI detectors and about the potential for foreign-born students and workers to be unfairly accused of, or penalized for, cheating.
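To make the metric concrete, here is a minimal sketch of a perplexity score. It rests on loud assumptions: real detectors measure perplexity with a large language model, whereas this toy swaps in an add-one-smoothed unigram word model, and the function names, reference text, and threshold are all hypothetical. It only illustrates the general idea that predictable wording yields low perplexity.

```python
import math
from collections import Counter


def unigram_perplexity(text, reference_corpus):
    """Perplexity of `text` under an add-one-smoothed unigram model.

    Toy stand-in for the language model a real detector would use.
    """
    ref_tokens = reference_corpus.lower().split()
    counts = Counter(ref_tokens)
    total = len(ref_tokens)
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words

    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")

    log_prob = 0.0
    for tok in tokens:
        # Add-one smoothing keeps unseen words from having zero probability.
        p = (counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)

    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


def flagged_as_ai(perplexity, threshold):
    """Hypothetical decision rule: low perplexity is treated as AI-like."""
    return perplexity < threshold


reference = "the cat sat on the mat the dog sat on the rug"
plain = unigram_perplexity("the cat sat on the mat", reference)
elaborate = unigram_perplexity("a feline reclined upon the tapestry", reference)

# The plain sentence is more predictable, so its perplexity is lower.
print(plain < elaborate)
```

Under a rule like `flagged_as_ai`, the plainer sentence is the one at risk of being flagged, which mirrors the bias described above: writers with a more limited vocabulary produce more predictable text.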

Potential Subversion of AI Detectors

The study also found that these detectors can be easily circumvented through prompt engineering, in which generative AI is asked to rephrase an essay using more elaborate vocabulary. This makes the detectors even less dependable and raises further ethical questions.

Recommendations

James Zou, a senior author of the study and a professor of biomedical data science at Stanford University, recommends against relying on these detectors in educational settings with a high concentration of non-native English speakers until they have been thoroughly evaluated and improved. He also proposes that developers move beyond perplexity as their primary metric and instead adopt watermarking, in which generative AI embeds subtle indicators of its identity into the content it generates.
